I assume these things can scrape Lemmy too?

They don’t need to scrape Lemmy. They just need a federated instance and then they have literally everything you post delivered to them as part of the way Lemmy is designed.

Please understand literally nothing on Lemmy is private.

Not even these super secret messages I’m sending you?

Put a public PGP key in your profile bio, and then you can actually send true end-to-end encrypted messages over insecure public channels.
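To make the public-key idea concrete, here is a toy sketch in Python. It swaps in textbook RSA with tiny primes (61 and 53, public exponent 17, the classic teaching values, chosen here as an assumption for illustration). This is not how PGP works in detail: real OpenPGP uses hybrid encryption, padding, and much larger keys, so this only shows why a published key lets strangers send you messages that only you can read.

```python
# Toy textbook RSA, for illustration only: tiny primes, no padding, NOT secure.
# Real PGP (OpenPGP) uses hybrid encryption with padded public-key schemes.

def make_keypair(p, q, e):
    """Build a toy (public, private) key pair from primes p, q and public exponent e."""
    n = p * q
    phi = (p - 1) * (q - 1)
    # Modular inverse of e mod phi (Python 3.8+); raises ValueError if gcd(e, phi) != 1.
    d = pow(e, -1, phi)
    return (n, e), (n, d)


def encrypt(public_key, m):
    """Anyone holding the published key can encrypt an integer message m < n."""
    n, e = public_key
    if not 0 <= m < n:
        raise ValueError("message must be an integer in [0, n)")
    return pow(m, e, n)


def decrypt(private_key, c):
    """Only the holder of the private exponent d can recover m."""
    n, d = private_key
    return pow(c, d, n)


public, private = make_keypair(61, 53, 17)  # `public` is what you'd post in your bio
ciphertext = encrypt(public, 65)             # a stranger encrypts with your public key
plaintext = decrypt(private, ciphertext)     # only you can decrypt it
print(public, ciphertext, plaintext)
```

The point is the asymmetry: the ciphertext can sit in a public Lemmy comment, and anyone can read the public key, but recovering the message needs the private exponent, which never leaves your machine.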

A very similar conversation led to a joke chain of PGP-encrypted replies between me and some other rando on Reddit a few years ago. We were both banned.

Darwin machine, pt. 2

I just checked and they actually disabled AI Overview. LMAO

suicAId

SuicAid, the solution to your problems with suicide.

Ah yes. Because that one Reddit user’s opinion holds equal weight to the thousands of professionals in the eyes of an LLM.

This is gonna get worse

before it gets better. Then it’ll get worse again.

I’d say it’s not the LLM at fault. The LLM is essentially an innocent. It’s the same as a four-year-old being told that if they clap hard enough they’ll make thunder. It’s not the kid’s fault that they’re being fed bad information.

The parents (companies) should be more responsible about what they tell their kids (LLMs).

Edit: disregard this, though, if I’ve completely misunderstood your comment.

Yeah that’s my point, too. AI employing companies should be held responsible for the stuff their AIs say. See how much they like their AI hype when they’re on the hook for it!

I mean, I don’t think anyone’s solution to this issue would be to put an AI on trial… but it’d be extremely reasonable to hold Google responsible for any potential damages from this, and I think it’d also be reasonable to go after the organization that trained this model if they marketed it as an end-user-ready LLM.

I’d say it’s more that parents (companies) should be more responsible about what they tell their kids (customers).

Because right now the companies have a new toy (AI) that they keep telling their customers can make thunder from clapping. But in reality the claps sometimes make thunder but are also likely to make farts. Occasionally some incredibly noxious ones too.

The toy might one day make earth-rumbling thunder reliably, but right now it can’t get close and saying otherwise is what’s irresponsible.

Sorry, I didn’t know we might be hurting the LLM’s feelings.

Seriously, why be an apologist for the software? There’s no effective difference between blaming the technology and blaming the companies who are using it uncritically. I could just as easily be an apologist for the company: not their fault they’re using software they were told would produce accurate information out of nonsense on the Internet.

Neither the tech nor the corps deploying it are blameless here. I’m well aware that an algorithm only does exactly what it’s told to do, but the people who made it are also lying to us about it.

> Sorry, I didn’t know we might be hurting the LLM’s feelings.

You’re not going to. CS folks like to anthropomorphise computers and programs, doesn’t mean we think they have feelings.

And we’re not the only profession doing that, though it might be more obvious in our case. A civil engineer, when a bridge collapses, is also prone to say “is the cable at fault, or the anchor?” without ascribing feelings to anything. What it is, though, is ascribing a sort of animist agency, which comes naturally to many people when wrapping their heads around complex systems full of different things, well, doing things.

The LLM is, indeed, not at fault. The LLM is a braindead cable anchor that some idiot, probably a suit, put in a place where it’s bound to fail.

Clearly fake: LLMs don’t bother citing their sources 😂

Lemmy would’ve suggested bean diet

also suggesting that those beans could have been found in a nebula, dick

Or not pooping for days

3 days of intensive treatment. That’ll sort ya

Feeling suicidal? No. Okay then try an all bean diet and see if it helps.

And installing Linux and axe-murdering anyone with a car.

Or installing Linux. You’ll still be depressed, but at least you have a good reason now.

And you’ll have a lot to do (and look forward to), which might actually help.

You don’t have time to be depressed when you’re trying to fix xorg.conf. (Yeah, I know, super dated reference; Linux is actually so good these days that I can’t find an equivalent joke.)

Editing grub.cfg from an emergency console, or running update-grub from a chroot, is a close second.

Adding the right Modeline to xorg.conf seemed more like magic when it worked. 🧙🏼

I’ve seen things you people wouldn’t believe… CRTs on fire from an xorg.conf typo… I configured display servers in the dark cause the screen was black… All those moments will be lost in time, like tears in rain… Time to switch to Wayland.

You haven’t lived until you’ve compiled a 3Com driver in order to get Token Ring connectivity so you can download the latest kernel source that has that new Ethernet thing in it.

You’ll also get knee-socks so win/win

I’ve been using Linux for 17 years, but they still haven’t issued me my pair of knee-socks. WTF?

Idk, are you using Arch? There seems to be a correlation, from what I’ve gathered on Lemmy.

Haha, I really like Linux, but that’s funny and somehow also true 😂

Is it any wonder these businesses are SO successful?

I can’t wait to see my old horny comments about anime girls come up.

Idk, it seems more helpful than the suicide hotline. I called them many times, only for them to tell me the same generic information, and they often hung up on me if I started to cry.

At this point I can’t tell the joke ones from the real ones.

It’s all a joke

And now I’m thinking of the Comedian from Watchmen. Alan Moore knows the score…

Neither can ChatGPT

I’d call that karma for Reddit and Google.

The thing these AI goons need to realize is that we don’t need a robot that can magically summarize everything it reads. We need a robot that can magically read everything, sort out the garbage, and summarize the good parts.

Good luck ever defining “good”.

good = whatever increases the stock’s value

/j

No joke there, you’re completely correct.

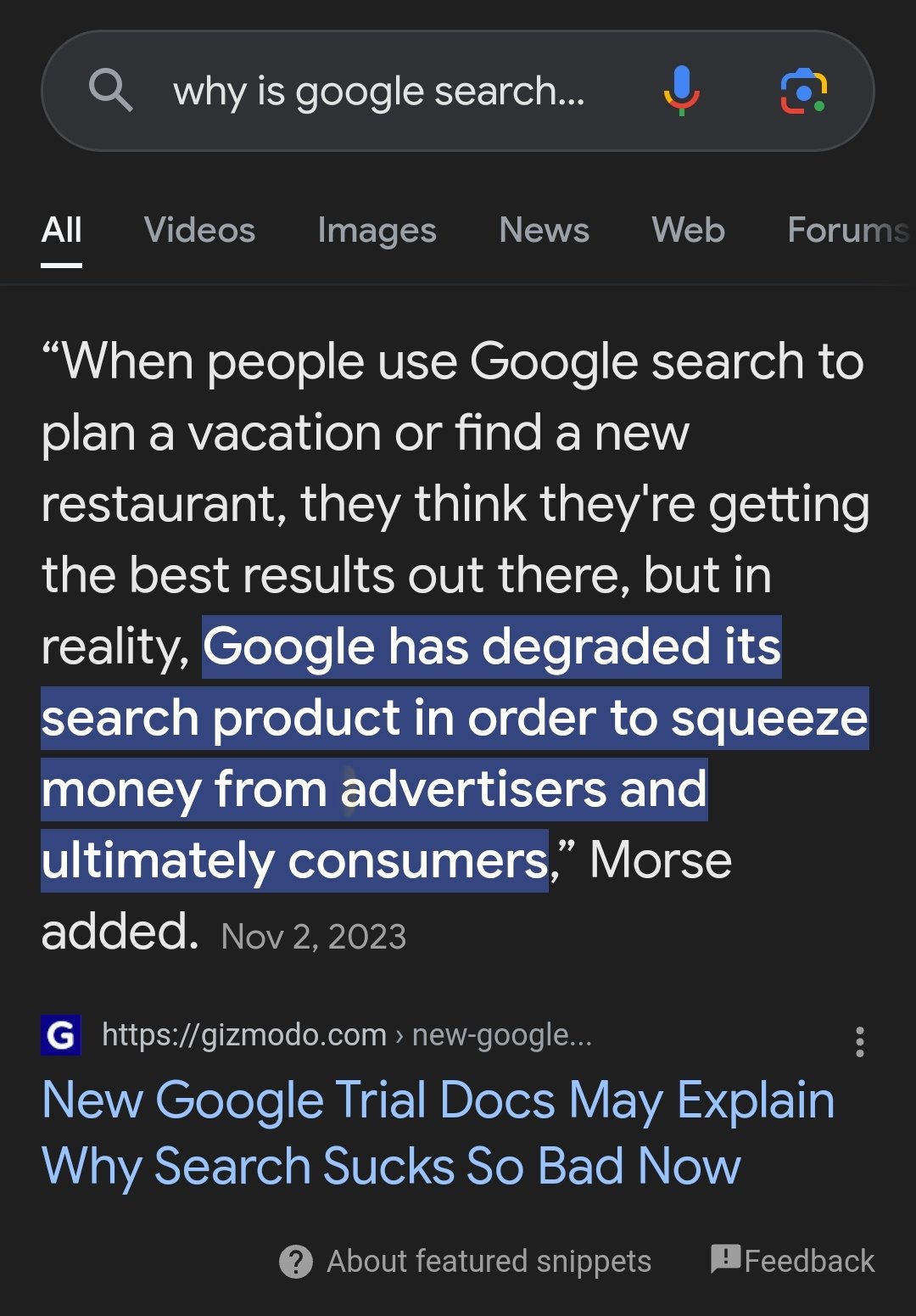

The thing is that that was how Google became so big in the first place. PageRank was a cool way of trying to filter out the garbage, and it worked real well. Even my non-techy friends have been getting frustrated with search not working like it used to (even before all this Gemini stuff was added).
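For anyone curious how PageRank “filtered out the garbage,” here’s a rough sketch of the classic power-iteration idea in Python. The link graph is made up, and the damping factor of 0.85 is the commonly cited textbook value; Google’s actual ranking today is far more involved and not public, so this is just the basic idea: a page matters if pages that matter link to it.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Classic PageRank by power iteration.

    links: dict mapping each page to the list of pages it links to
    (a hypothetical toy graph, not real web data).
    """
    pages = set(links)
    for targets in links.values():
        pages.update(targets)
    pages = sorted(pages)
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # start from a uniform distribution

    for _ in range(iterations):
        # Every page gets the "random jump" baseline share.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its current rank evenly among its outlinks.
                share = damping * rank[page] / len(targets)
                for t in targets:
                    new_rank[t] += share
            else:
                # Dangling page (no outlinks): spread its rank over all pages.
                share = damping * rank[page] / n
                for t in pages:
                    new_rank[t] += share
        rank = new_rank
    return rank


# Toy web: "spam" links to "good", but nobody links back to "spam".
links = {
    "a": ["good"],
    "b": ["good"],
    "good": ["a", "b"],
    "spam": ["good"],
}
ranks = pagerank(links)
for page, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(f"{page}: {score:.4f}")
```

The spam page can link out all it wants, but since nothing links to it, it never gets more than the baseline (1 - damping) / n share, while the page everyone links to ends up on top.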

I just asked Google why its search is complete shit now. At least it isn’t being biased, lol 🤪

That’s not the AI, though; that’s a snippet from an article about how Google search is shit now.

Yeah, when Google starts trying to manipulate the meaning of results in its favour, instead of just traffic, things will be at a whole other level of scary.

One reddit user suggests LOREM IPSUM DOLOR SIT AMET